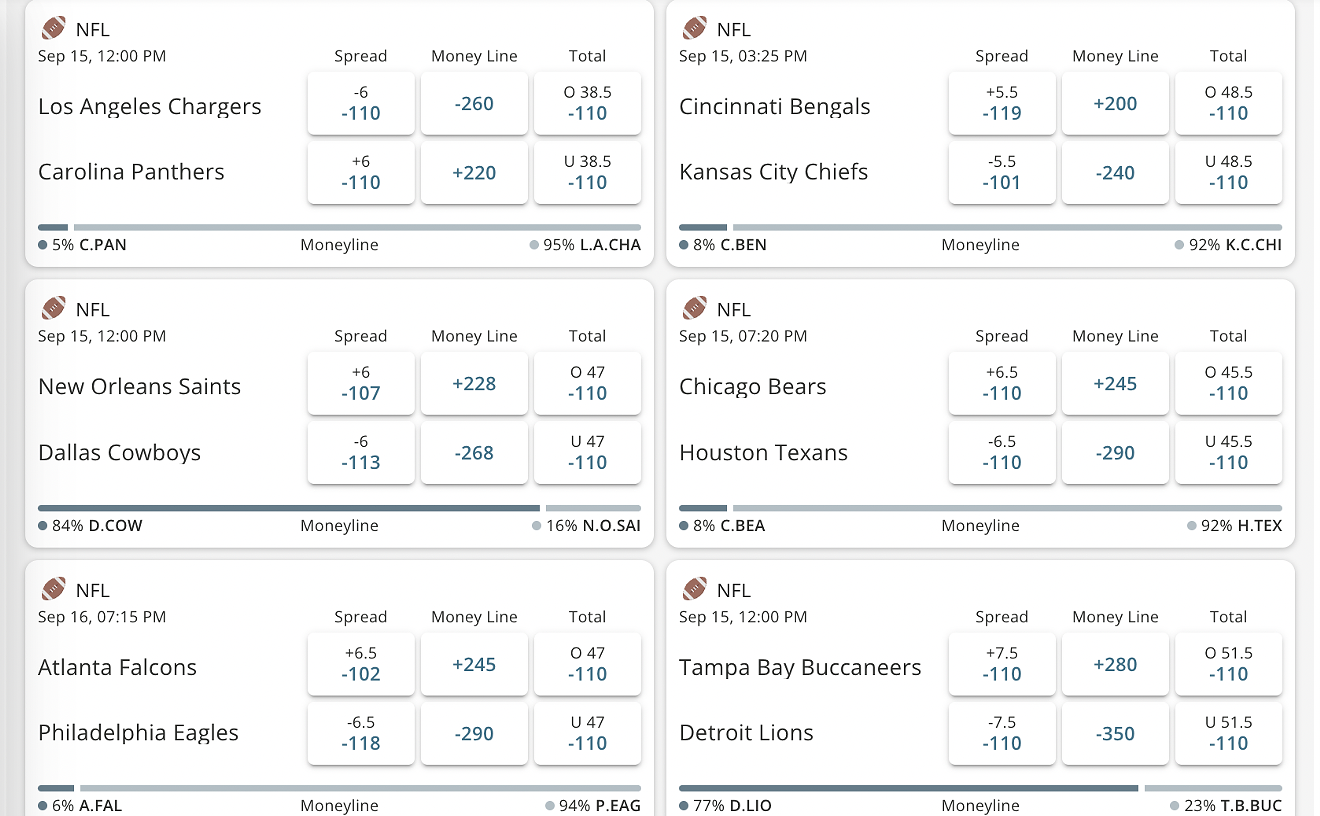

Texas House Rep. and former Mansfield Mayor David Cook recently posted a typical campaign message on X: "Yard signs are available." The post is punctuated by an innocuous smiley face emoji, and an accompanying image depicts an idyllic American home — with a yard too green to be in Texas — boasting a “David Cook for Texas House” sign.

If the too-perfect-to-be-real yard didn’t give the image away, the slightly wonky stars and stripes decorating the home’s American flag might: the image is fake. It was created by an artificial intelligence image generator, which, given a few keywords and descriptors, can invent images of varying levels of realism within moments.

As AI image generators grow in popularity and advance technologically, Americans are growing increasingly concerned about the impact they will have on our electoral system. A recent UChicago Harris/AP-NORC Poll found that 62% of Republicans and 70% of Democrats would support political candidates taking a pledge not to use AI in their campaigns.

It’s an unlikely ask. A glance at former President Donald Trump’s social media accounts shows that AI images have been a favorite tool of his for months, and in the build-up to November’s presidential election, more and more politically inclined accounts are turning to AI to help with political messaging. According to Darrell West, a Senior Fellow at the Brookings Institution in Washington, D.C., the potential impact of these images will depend on the intentions of the wielder.

The Good

In some cases, the effect of AI in politics has been positive. West, who researches the overlap between artificial intelligence and politics, has witnessed campaigns utilize AI models to track phone banking data or speed up communications. Some states are using the technology to help clean out voter rolls, although in some instances, the technology has been flagged as faulty.

“You used to need an army of people and a lot of money to run campaign ads and engage in voter outreach, and that's no longer the case,” West told the Observer. “We've essentially democratized technology by putting powerful tools in the hands of everyone. It's good in the sense that individuals or small organizations can communicate within the campaign just as well as large and wealthy organizations.”

DC4TX yard signs are going up in #HD96! Want to show some love for my re-election campaign? Drop us a note at the link below or shoot us an email to [email protected] to request a yard sign! 🙂 https://t.co/sPWT9QzNwl pic.twitter.com/0f4jE0mUOa

— David Cook (@DavidCookTexas) September 3, 2024

In cases like Cook’s post, where innocent messaging is accompanied by an innocent image, the use of AI does little harm to anyone.

But what if his intentions hadn’t been so innocent?

The Bad

In the past few weeks you may have seen a photo of Trump riding majestically atop a lion. Or a photo of Trump cozying up with innocent-looking kittens and ducklings. Or maybe some combination of the two — like a photo of Trump riding (less majestically) atop a (less innocent-looking) cat with a massive rifle in hand, and an army of kittens surrounding him.

You may be scratching your head and wondering, “Didn’t his campaign just make fun of cat people?” Here's a quick rundown to bring you up to speed: An unfounded right-wing conspiracy theory originating in Springfield, Ohio, claims that Haitian immigrants are eating park geese and neighborhood pets. In response, conservatives have crowned Trump the champion of cute and fuzzy things and are creating fake images of the former president snuggled up with kittens as fodder for anti-immigrant sentiment.

The trend went from zero to 100 in a matter of days, with some AI memes (like one of Trump being chased by Black men, kittens in his clutches) now resorting to outright racism. It’s an example of the more “nefarious” uses AI can serve, West said.

— Donald J. Trump (@realDonaldTrump) August 18, 2024

“People are trying to mislead voters by putting false narratives out,” West said. “A few weeks ago, Trump [posted] an image of Kamala Harris addressing a communist rally with the hammer and sickle flag. That obviously never took place, but [it] plays to his narrative that she's a Marxist.”

West is concerned that realistic AI images are “erasing the difference between truths and falsehoods.” In his own life, he has witnessed the difficulty people have telling real from fake on social media.

“You can portray candidates in a false light by showing a candidate next to somebody who's very unpopular. I've seen pictures of Kamala Harris in a bathing suit hugging convicted sex offender Jeffrey Epstein,” West said. “That never took place, but the picture looked completely authentic. I saw people circulating that on Facebook and saying, ‘Hey, we should be voting for someone who supports child molestation?’ So I think we need to worry about false narratives that potentially could influence voters.”

The Ugly

In a campaign ad-style video posted by X owner Elon Musk, whose sphere of influence cannot be overstated, artificial intelligence was used to create a Kamala Harris-sounding voiceover that disparages Harris and President Joe Biden. "I had four years under the tutelage of the ultimate Deep State Puppet, a wonderful mentor, Joe Biden," the fake Harris states as the corresponding text scrolls across the video.

This is amazing 😂

— Elon Musk (@elonmusk) July 26, 2024

pic.twitter.com/KpnBKGUUwn

The video has been viewed over 136 million times.

Musk's interest in AI likely inspired the newest addition to the X platform, a generative AI chatbot known as Grok, which is in beta testing. Musk describes the bot as "anti-woke." The chatbot has been linked to election misinformation that spread after Biden stepped down from his reelection campaign, prompting a group of secretaries of state to flag the error. At first, Musk did not seem to mind, the Guardian reports.

After being urged to turn the bot nonpartisan in a letter signed by five secretaries of state, Musk complied. Still, the episode showed that when it comes to AI, guardrails are few and far between.

"We are erasing the difference between truth and falsehoods," West said. "And that's the thing that actually could influence voters."